Making a multi-proxy Minecraft queue system using Redis

The waiting room

Popular Minecraft servers often have to deal with lots of concurrent players, which requires powerful and flexible infrastructure. Anybody who has played on large servers (such as Hypixel) will have noticed that they do not offer a "survival" gamemode similar to vanilla Minecraft. This is because a Minecraft world can only be hosted on a single server at a time [1], and a single server cannot handle more than a couple hundred players.

[1] There are workarounds such as MultiPaper, but most of them are not stable enough.

However, some networks do offer a vanilla-like experience, which necessarily means a cap on the number of players. When a server gets full, players are not able to join until someone else leaves. This is painful for them, as they have to keep reconnecting until they get in.

In these cases, most servers have implemented a queue system, in which players join a "waiting room" first and get moved to the actual server when a slot becomes available. Waiting rooms are basically stripped-down Minecraft servers with no features, which is why they can handle large numbers of players. A great example of this is 2b2t, which usually has a queue so long that it can take hours to get in.

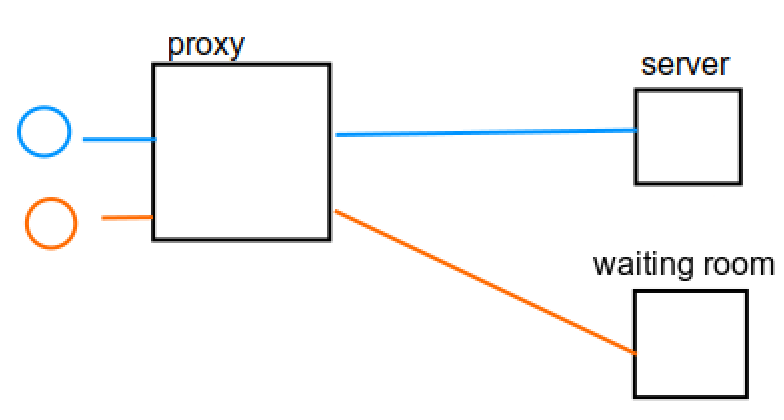

Queue plugins are conceptually simple. When players connect to the network, they first connect to a proxy. The proxy then checks whether there is room on the target server. If there is not, it sends the player to the waiting room and adds them to the end of a list. When a server slot becomes available, the proxy moves the first player in the list to the server.

A proxy is just an intermediary between a player and a server. It allows players to switch servers without having to disconnect and return to the server selection menu.

This model is usually good enough for medium-sized networks. Because the whole system is managed by the proxy, it can easily be adapted to support multiple servers, each with its own queue and waiting room.
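The single-proxy model fits in a few lines because all the state lives in the proxy's memory. Below is a minimal Java sketch of that model with one queue per server. The class name, server names and capacities are hypothetical. The sketch only tracks who would be connected or queued, and leaves out the actual proxy API calls.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical single-proxy queue manager: every queue lives in this proxy's memory.
// Real connect / waiting-room calls to the proxy API are omitted.
class LocalQueueManager {
    private final Map<String, Integer> capacity = new HashMap<>();
    private final Map<String, Integer> online = new HashMap<>();
    private final Map<String, Deque<UUID>> queues = new HashMap<>();

    void registerServer(String server, int maxPlayers) {
        capacity.put(server, maxPlayers);
        online.put(server, 0);
        queues.put(server, new ArrayDeque<UUID>());
    }

    /** Returns true if the player goes straight to the server, false if they are queued. */
    boolean join(String server, UUID player) {
        if (online.get(server) < capacity.get(server)) {
            online.merge(server, 1, Integer::sum); // a real plugin would connect the player here
            return true;
        }
        queues.get(server).addLast(player);        // ...or send them to the waiting room here
        return false;
    }

    /** Called when a player leaves; returns the queued player who takes the slot, or null. */
    UUID leave(String server) {
        online.merge(server, -1, Integer::sum);
        UUID next = queues.get(server).pollFirst();
        if (next != null) {
            online.merge(server, 1, Integer::sum); // the freed slot goes to the head of the queue
        }
        return next;
    }

    int queueLength(String server) {
        return queues.get(server).size();
    }
}
```

A freed slot is handed straight to the head of the queue. As a result, a newly joining player can only skip the queue when the queue is empty.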

Multiple proxies

Large networks might want to use multiple proxies to improve performance and distribute the load. This poses a problem for our design, since we relied on a single proxy instance holding the queue data locally. In a multi-proxy network, a player might be connected through proxy 1 or proxy 2, so the system needs to be adapted accordingly.

We could require players who want to queue for server A to go through proxy 1, but that would certainly hurt the user experience. The whole point of a proxy is that players can switch servers seamlessly. A player connected to proxy 2 would have to disconnect and manually reconnect through proxy 1.

Another idea is to keep a separate queue on each proxy. However, the proxies would then have inconsistent views of the server, and players could get stuck in the queue. For instance, if every player on server A is connected through proxy 1, players in proxy 2's queue would never get in, since proxy 2 cannot detect when someone leaves server A.

The solution: Redis

Redis is an in-memory database. It keeps its data in RAM rather than on disk like most other databases, which gives it very high read/write speeds. It can also work as a message broker through its publish/subscribe feature, which is useful for what we want to achieve.

We can store the queue information in Redis so that every proxy can access it. Since Redis is a key-value store (and therefore a NoSQL database), we can simply take the queue (a list of UUIDs), serialize it to JSON and store it under a single key.
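Here is a minimal sketch of this naive approach. An in-memory map stands in for the Redis GET and SET commands, and the JSON is hand-rolled; a real plugin would more likely use a Redis client and a JSON library. The class names are hypothetical, and the key follows the server.survival.queue naming used later in this post. Note that enqueue is a read-modify-write sequence, which matters in the next section.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;

// In-memory stand-in for Redis string commands (GET / SET). NOT a real client.
class FakeRedisStrings {
    private final Map<String, String> data = new HashMap<>();
    synchronized String get(String key) { return data.get(key); }
    synchronized void set(String key, String value) { data.put(key, value); }
}

class SerializedQueue {
    // Serializes a list of UUIDs as a JSON array of strings, e.g. ["...","..."].
    static String toJson(List<UUID> queue) {
        List<String> quoted = new ArrayList<>();
        for (UUID id : queue) quoted.add("\"" + id + "\"");
        return "[" + String.join(",", quoted) + "]";
    }

    // Parses the array produced by toJson; a missing key counts as an empty queue.
    static List<UUID> fromJson(String json) {
        List<UUID> queue = new ArrayList<>();
        if (json == null) return queue;
        String body = json.trim();
        body = body.substring(1, body.length() - 1).trim(); // drop '[' and ']'
        if (body.isEmpty()) return queue;
        for (String part : body.split(",")) {
            queue.add(UUID.fromString(part.trim().replace("\"", "")));
        }
        return queue;
    }

    // Naive read-modify-write: GET, deserialize, append, serialize, SET.
    static void enqueue(FakeRedisStrings redis, String key, UUID player) {
        List<UUID> queue = fromJson(redis.get(key));
        queue.add(player);
        redis.set(key, toJson(queue));
    }
}
```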

Public enemy No. 1: Race conditions

As you might have realised, the issue with this model is that two or more proxies might try to modify the same queue at the same time, which can lead to data loss. Here is an example of such a scenario:

- Queue for survival is empty, but the server is full

- Player Bob is in the lobby, connected through proxy 1

- Player Alice is also in the lobby, but connected through proxy 2

- Bob tries to join survival

- Proxy 1 reads the queue for survival from Redis

- Alice tries to join survival

- Proxy 2 reads the (still empty) queue for survival from Redis

- Proxy 1 adds Bob to the queue (which is empty) and writes the serialized list to Redis

- Proxy 2 adds Alice to its copy of the queue (which is also empty) and writes the serialized list to Redis, overwriting the previous data

- Only Alice is in the queue; Bob's entry has been lost
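The interleaving above can be replayed deterministically. In this sketch the class name is hypothetical, a plain HashMap stands in for Redis, and the queue is serialized as a comma-separated string instead of JSON to keep it short. The lost update happens the same way with JSON. Both reads happen before either write, exactly as in the list.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Replays the Bob/Alice scenario against an in-memory stand-in for Redis GET/SET.
class LostUpdateDemo {
    static final Map<String, String> redis = new HashMap<>();

    static List<String> read(String key) {
        String raw = redis.get(key);
        if (raw == null || raw.isEmpty()) return new ArrayList<>();
        return new ArrayList<>(Arrays.asList(raw.split(",")));
    }

    static void write(String key, List<String> queue) {
        redis.put(key, String.join(",", queue));
    }

    static List<String> run() {
        String key = "server.survival.queue";
        redis.remove(key);                    // the queue starts empty
        List<String> proxy1View = read(key);  // proxy 1 reads (Bob joins)
        List<String> proxy2View = read(key);  // proxy 2 reads the still-empty queue (Alice joins)
        proxy1View.add("Bob");
        write(key, proxy1View);               // proxy 1 writes [Bob]
        proxy2View.add("Alice");
        write(key, proxy2View);               // proxy 2 overwrites it with [Alice]
        return read(key);
    }
}
```

LostUpdateDemo.run() returns a list containing only "Alice".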

But wait… what about Redis lists?

Redis has more data types than plain strings. Among others, it supports lists of strings, sets, sorted sets and hashes.

So far we have stored the queue as a single string (a serialized Java list). If we store each player as an element of a Redis list instead, the issue goes away. When a player joins the queue, the proxy just sends an RPUSH command, which atomically appends the player to the end of the list without overwriting existing data. Then, when a player connected to the server through proxy 1 leaves, proxy 1 pops the first player off the list (LPOP) and moves them to the server. If that player is not connected through proxy 1, proxy 1 can broadcast a message through Redis asking the other proxies to move the player. The proxy the player is connected to will find them and move them.
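Here is a rough sketch of the list-based design. FakeRedis is an in-memory stand-in, not a Redis client. It models RPUSH and LPOP, each atomic as on a real server, and a single pub/sub channel that delivers messages synchronously for simplicity. QueueProxy and its fields are hypothetical. Each proxy only knows the players connected through it, and it acts on a broadcast only if it owns the player named in the message.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.UUID;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// In-memory stand-in for RPUSH / LPOP / LLEN and one PUBLISH/SUBSCRIBE channel. NOT a real client.
class FakeRedis {
    private final Map<String, LinkedList<String>> lists = new HashMap<>();
    private final List<Consumer<String>> subscribers = new CopyOnWriteArrayList<>();

    synchronized long rpush(String key, String value) {
        LinkedList<String> list = lists.computeIfAbsent(key, k -> new LinkedList<>());
        list.addLast(value);
        return list.size();
    }

    synchronized String lpop(String key) {
        LinkedList<String> list = lists.get(key);
        return (list == null || list.isEmpty()) ? null : list.removeFirst();
    }

    synchronized long llen(String key) {
        LinkedList<String> list = lists.get(key);
        return list == null ? 0 : list.size();
    }

    void publish(String message) { for (Consumer<String> s : subscribers) s.accept(message); }

    void subscribe(Consumer<String> handler) { subscribers.add(handler); }
}

// Hypothetical proxy that shares the survival queue with other proxies through FakeRedis.
class QueueProxy {
    static final String QUEUE = "server.survival.queue";
    final Set<UUID> localPlayers;               // players connected through this proxy
    final List<UUID> moved = new ArrayList<>(); // players this proxy sent to the server
    private final FakeRedis redis;

    QueueProxy(FakeRedis redis, Set<UUID> localPlayers) {
        this.redis = redis;
        this.localPlayers = localPlayers;
        // Every proxy hears every "move" request; only the one holding the player acts.
        redis.subscribe(msg -> {
            UUID id = UUID.fromString(msg);
            if (this.localPlayers.contains(id)) moved.add(id);
        });
    }

    void joinQueue(UUID player) { redis.rpush(QUEUE, player.toString()); }

    // Called when a player connected through THIS proxy leaves the server.
    void onSlotFreed() {
        String head = redis.lpop(QUEUE);
        if (head == null) return;
        UUID id = UUID.fromString(head);
        if (localPlayers.contains(id)) moved.add(id); // our player: move them directly
        else redis.publish(head);                     // someone else's: ask the other proxies
    }
}
```

One design caveat: Redis pub/sub is fire-and-forget. If the popped player has disconnected in the meantime, no proxy acts on the message, and a real plugin would need to pop the next player instead.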

Queue priority

Many servers offer queue priority to donors, meaning that players who have purchased a rank are placed ahead of regular players. If there are five regular players in the queue and someone with a "VIP" rank joins, they will be placed in the first position. If another "VIP" player then joins, they will be placed in the second position, and so on.

This does not fit our list-based model. A plain Redis list has no notion of priority, so the proxy has to work out where the new player goes. Unfortunately, that means the plugin has to read the list before modifying it, which brings back the read-modify-write race condition.
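To see why the proxy needs the whole list, here is a minimal sketch of the placement rule described above. It assumes a hypothetical numeric priority per player: 0 for regular players, higher for better ranks. A new player goes after everyone with the same or higher priority and before everyone with a lower one, so VIPs keep their relative order. Computing that index requires the current contents of the queue.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical queue entry: a player name plus a numeric priority (higher = better rank).
class QueueEntry {
    final String player;
    final int priority;
    QueueEntry(String player, int priority) { this.player = player; this.priority = priority; }
}

class PriorityQueuePlacement {
    // The queue is kept sorted by descending priority, so the new entry goes
    // just before the first entry with a strictly lower priority.
    static int insertionIndex(List<QueueEntry> queue, int priority) {
        int index = 0;
        while (index < queue.size() && queue.get(index).priority >= priority) index++;
        return index;
    }

    static void enqueue(List<QueueEntry> queue, String player, int priority) {
        queue.add(insertionIndex(queue, priority), new QueueEntry(player, priority));
    }
}
```

With five regular players queued, the first VIP lands at index 0 and the second at index 1, matching the example above.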

Resource locking!

Let's go back to our original approach, where we store serialized lists. We need proxies to be able to tell each other "wait! don't touch this list while I'm modifying it!", and resource locks might be just what we are looking for.

Before modifying a list, like server.survival.queue, we will first try to read server.survival.lock to see if it is set or not. In case it is, we will have to wait until the other proxy finishes changing the list; but if it is not set, we can set it ourselves to make sure other proxies are aware and then modify the list.

But what if two proxies read it at the same time?

You might be thinking that a race condition could occur here; two proxies might read the database at the same time and think there is no lock, then both would set it thinking they can change the list.

Actually, Redis has a “set if not exists” command (SETNX) that solves this issue for us. We do not have to read server.survival.lock before trying to set it, because we can just try to write to it using SETNX; Redis will then tell us if it was written or not. If it was, it implies there was no lock before; if it was not, there was a lock already set. In case we were not able to acquire the lock, we can just retry later.
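Here is a minimal sketch of the SETNX approach. It uses an in-memory stand-in where ConcurrentHashMap.putIfAbsent plays the role of SETNX; both are atomic "set only if absent" operations. The lock and queue keys follow the naming above, and the class names are hypothetical. The spin-and-retry loop is a simplification; a real plugin would rather back off or schedule a retry. This version has no expiry, so a proxy that crashes while holding the lock would block everyone.

```java
import java.util.concurrent.ConcurrentHashMap;

// In-memory stand-in for Redis: putIfAbsent is atomic, like SETNX. NOT a real client.
class FakeRedisLocking {
    private final ConcurrentHashMap<String, String> data = new ConcurrentHashMap<>();

    boolean setnx(String key, String value) { return data.putIfAbsent(key, value) == null; } // true = set
    String get(String key) { return data.get(key); }
    void set(String key, String value) { data.put(key, value); }
    void del(String key) { data.remove(key); }
}

class LockedQueue {
    static final String QUEUE = "server.survival.queue";
    static final String LOCK = "server.survival.lock";

    // Appends to a comma-separated "serialized" queue while holding the lock.
    static void enqueue(FakeRedisLocking redis, String player) {
        while (!redis.setnx(LOCK, "locked")) {
            Thread.yield(); // another proxy holds the lock: retry
        }
        try {
            String current = redis.get(QUEUE); // read-modify-write is now safe
            String updated = (current == null || current.isEmpty()) ? player : current + "," + player;
            redis.set(QUEUE, updated);
        } finally {
            redis.del(LOCK); // release (no expiry or ownership check in this version)
        }
    }
}
```

With two threads each enqueuing 500 players, the queue ends up with all 1000 entries, unlike the unlocked version.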

Proxy crashes and stuck threads

To prevent a proxy from acquiring the lock, crashing and locking the resource forever, we can give the lock an expiration. SETNX itself does not accept one. Instead, the SET command with the NX and PX (or EX) options sets the key only if it does not exist and gives it a time to live, all in a single atomic command. This way, if the lock is not removed within that time, Redis deletes it automatically.
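Here is a sketch of the expiring lock. Time is passed in explicitly (in milliseconds) so the behaviour is easy to follow; real Redis uses its own clock. The stand-in setNxPx mimics SET key value NX PX ttl and is not a real client call. The 5-second TTL and the proxy names in the test are arbitrary assumptions.

```java
import java.util.HashMap;
import java.util.Map;

// In-memory stand-in for "SET key value NX PX ttl". NOT a real client.
class ExpiringLockStore {
    private static final class Item {
        final String value;
        final long expiresAt;
        Item(String value, long expiresAt) { this.value = value; this.expiresAt = expiresAt; }
    }
    private final Map<String, Item> data = new HashMap<>();

    // Returns true if the key was set, i.e. the lock was free or had expired.
    synchronized boolean setNxPx(String key, String value, long ttlMillis, long now) {
        Item existing = data.get(key);
        if (existing != null && existing.expiresAt > now) return false; // still held
        data.put(key, new Item(value, now + ttlMillis));
        return true;
    }

    // Expired keys read as missing, as they would in Redis.
    synchronized String get(String key, long now) {
        Item item = data.get(key);
        return (item == null || item.expiresAt <= now) ? null : item.value;
    }
}
```

On a real server, the acquisition would look like SET server.survival.lock proxy-1 NX PX 5000. It replies OK when the lock was acquired and a nil reply when someone else already holds it.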

Even though the lock expires automatically, we want to release it as soon as we finish changing the list, so other proxies can modify it as soon as possible. We cannot just delete the lock directly. If our proxy got stuck for a while and our lock expired in the meantime, another proxy may have acquired it, and we would be deleting that proxy's lock.

To solve this, we can set the lock to a random, unique value and store that value locally. When we want to release the lock, we only delete it if it still holds the value we set. Done naively, this requires two separate calls (GET, then DEL), and the lock could expire and be taken by another proxy in between. Fortunately, Redis has an EVAL command, which runs a Lua script atomically on the Redis server itself.

Using EVAL, we can send the following script:

```lua
if redis.call("get", KEYS[1]) == ARGV[1] then
    return redis.call("del", KEYS[1])
else
    return 0
end
```

This will cause the lock to be deleted only if it was the same lock we set ourselves, preventing us from accidentally deleting another lock.
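Here is a sketch of the proxy side that puts the pieces together. TokenLockStore is an in-memory stand-in, not a Redis client; its releaseIfOwner method does what the Lua script does, as one synchronized step, much like EVAL runs the script atomically. TokenLock is a hypothetical helper that generates a fresh random token for each acquisition. Against a real server, acquiring would be SET with NX and PX, and releasing would be EVAL with the script, one key (the lock) and the token as the argument.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// In-memory stand-in for the lock key (NOT a real client); time is passed in explicitly.
class TokenLockStore {
    private static final class Item {
        final String value;
        final long expiresAt;
        Item(String value, long expiresAt) { this.value = value; this.expiresAt = expiresAt; }
    }
    private final Map<String, Item> data = new HashMap<>();

    synchronized String get(String key, long now) {
        Item item = data.get(key);
        return (item == null || item.expiresAt <= now) ? null : item.value;
    }

    // Mimics SET key value NX PX ttl.
    synchronized boolean setNxPx(String key, String value, long ttlMillis, long now) {
        if (get(key, now) != null) return false;
        data.put(key, new Item(value, now + ttlMillis));
        return true;
    }

    // Same logic as the Lua script: if GET(key) == token then DEL(key) (returns 1) else 0.
    synchronized long releaseIfOwner(String key, String token, long now) {
        if (token.equals(get(key, now))) {
            data.remove(key);
            return 1;
        }
        return 0;
    }
}

// Hypothetical proxy-side helper around one lock key.
class TokenLock {
    private final TokenLockStore store;
    private final String key;
    private String token; // null while we do not (think we) hold the lock

    TokenLock(TokenLockStore store, String key) { this.store = store; this.key = key; }

    boolean tryAcquire(long ttlMillis, long now) {
        String candidate = UUID.randomUUID().toString(); // random, unique lock value
        if (!store.setNxPx(key, candidate, ttlMillis, now)) return false;
        token = candidate;
        return true;
    }

    // Returns true only if the lock was still ours and has now been deleted.
    boolean release(long now) {
        if (token == null) return false;
        boolean released = store.releaseIfOwner(key, token, now) == 1;
        token = null;
        return released;
    }
}
```

The test replays the stuck-proxy scenario. Proxy 1's lock expires, and proxy 2 acquires it. When proxy 1 wakes up, its release attempt finds a different token and leaves proxy 2's lock alone.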

Conclusion

Despite the difficulty of having multiple processes access shared resources, creating a queue system that scales across multiple proxies is perfectly achievable and, in fact, quite straightforward. The part that interacts with the proxy's API is rather simple and minimal.

Coincidentally, I am writing this post while working on a queue plugin, which I hope to finish and publish as an open source project for my portfolio. It might not be suitable for production environments; I intend it more as a proof of concept than anything else.

As a side note, I would like to point out that I am using Kotlin for the first time. Although the syntax can be weird at first, it is quite comfortable to work with since it has null checks and other features that make code less verbose in comparison to Java. Once I finish the plugin I might write a follow up article on this as well!